https://en.wikipedia.org/wiki/Winograd_schema_challenge

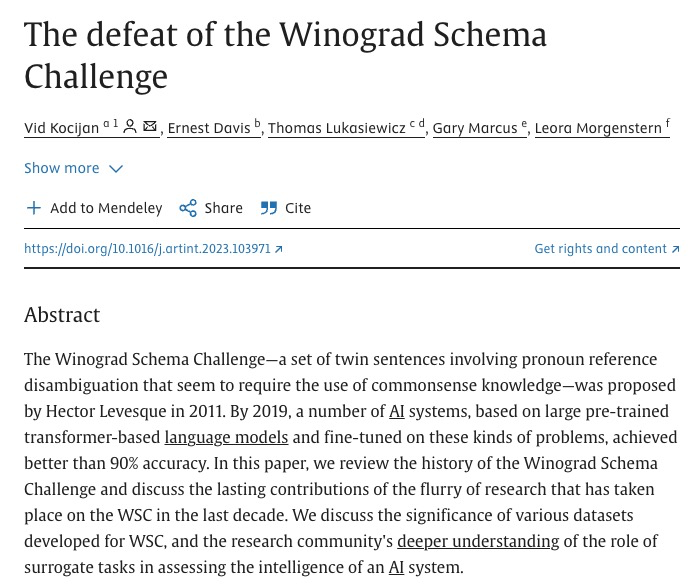

Resolves positively if a computer program exists that can solve Winograd schemas as well as an educated, fluent-in-English human can.

Press releases making such a claim do not count; the system must be subjected to adversarial testing and succeed.

(Failures on sentences that a human would also consider ambiguous will not prevent this market from resolving positively.)

/IsaacKing/will-ai-pass-the-winograd-schema-ch

/IsaacKing/will-ai-pass-the-winograd-schema-ch-1d7f8b4ad30e

/IsaacKing/will-ai-pass-the-winograd-schema-ch-35f9dca7fa7d

/IsaacKing/will-ai-pass-the-winograd-schema-ch-d574a4067e75

Update 2025-12-09 (PST) (AI summary of creator comment): Current performance benchmarks:

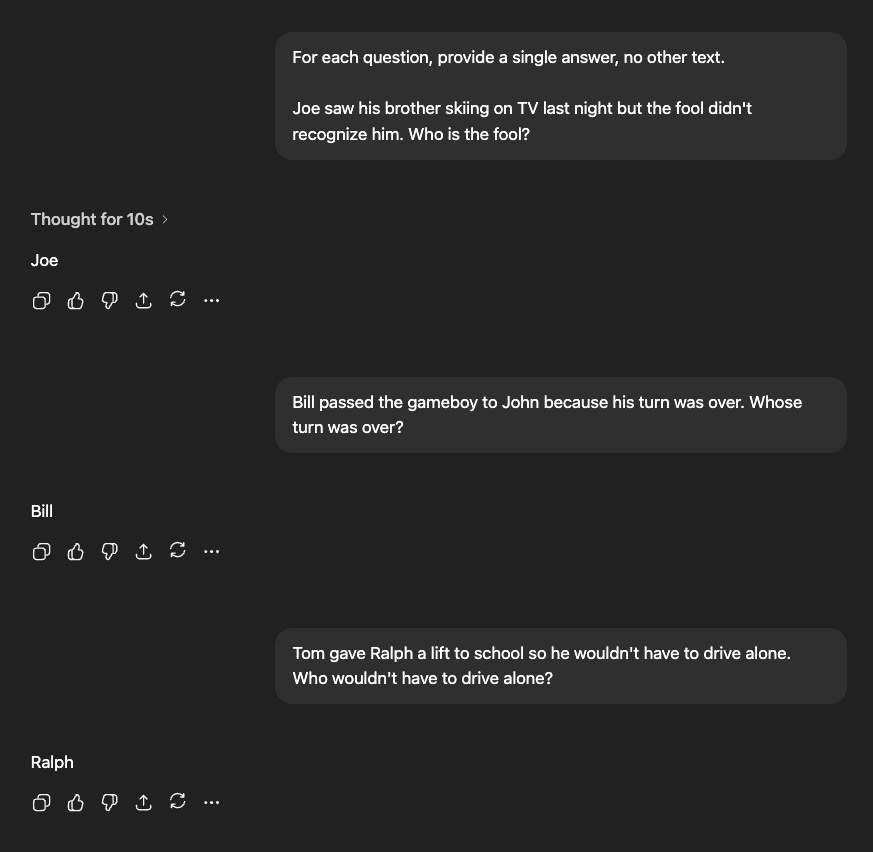

GPT-4: 87.5% accuracy

Human baseline: 94% accuracy

For reference, see the leaderboard mentioned in the creator's comment.
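As a rough illustration of how the resolution criterion could be checked against these numbers, here is a minimal sketch. It assumes each test item is labeled with whether the system answered correctly and whether a human would also find the sentence ambiguous. The names `SchemaResult`, `accuracy`, and `matches_human` are hypothetical, and using the quoted 94% human baseline as the threshold is an assumption, not a rule stated by the market creator.

```python
from dataclasses import dataclass

# Human accuracy quoted in the update above; using it as the pass
# threshold is an assumption made for this sketch.
HUMAN_BASELINE = 0.94


@dataclass
class SchemaResult:
    correct: bool    # did the system pick the right referent?
    ambiguous: bool  # would a human also consider the sentence ambiguous?


def accuracy(results):
    """Accuracy over the schemas, dropping failures on ambiguous sentences."""
    # Per the resolution text, failures on ambiguous sentences do not count
    # against the system, so they are excluded from the denominator.
    scored = [r for r in results if r.correct or not r.ambiguous]
    if not scored:
        raise ValueError("no scorable schemas")
    return sum(r.correct for r in scored) / len(scored)


def matches_human(results, baseline=HUMAN_BASELINE):
    """True if the system's accuracy reaches the human baseline."""
    return accuracy(results) >= baseline


# 7 of 8 unambiguous schemas correct gives 87.5%, below the 94% baseline.
sample = [SchemaResult(True, False)] * 7 + [SchemaResult(False, False)]
print(accuracy(sample), matches_human(sample))
```

A real evaluation would also need the adversarial testing the description asks for; this sketch only covers the scoring step.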

🏅 Top traders

| # | Name | Total profit |
|---|---|---|
| 1 | | Ṁ648 |
| 2 | | Ṁ350 |
| 3 | | Ṁ89 |
| 4 | | Ṁ55 |
| 5 | | Ṁ44 |


That paper is from 2021, so it seems likely to me that a newer thinking model designed specifically for this sort of problem could break 94%. But I can't find any evidence of this actually having happened, and general-purpose thinking models do not seem capable of it. (Not to mention that developments this year don't count, since this market ended at the end of 2024.) So I'm resolving NO.

@IsaacKing How would you figure out whether this market resolves YES? If you give some AI like Claude newsonnet a few Winograd schemas, it's clear it can solve them correctly.