Elon Musk has been very explicit in promising a robotaxi launch in Austin in June with unsupervised full self-driving (FSD). We'll give him some leeway on the timing and say this counts as a YES if it happens by the end of August.

As of April 2025, Tesla seems to be testing this with employees, using supervised FSD, and is doubling down on the public Austin launch.

PS: A big monkey wrench no one anticipated when we created this market is how to treat the passenger-seat safety monitors. See FAQ9 for how we're trying to handle that in a principled way. Tesla is very polarizing and I know it's "obvious" to one side that safety monitors = "supervised" and that it's equally obvious to the other side that the driver's seat being empty is what matters. I can't emphasize enough how not obvious any of this is. At least so far, speaking now in August 2025.

FAQ

1. Does it have to be a public launch?

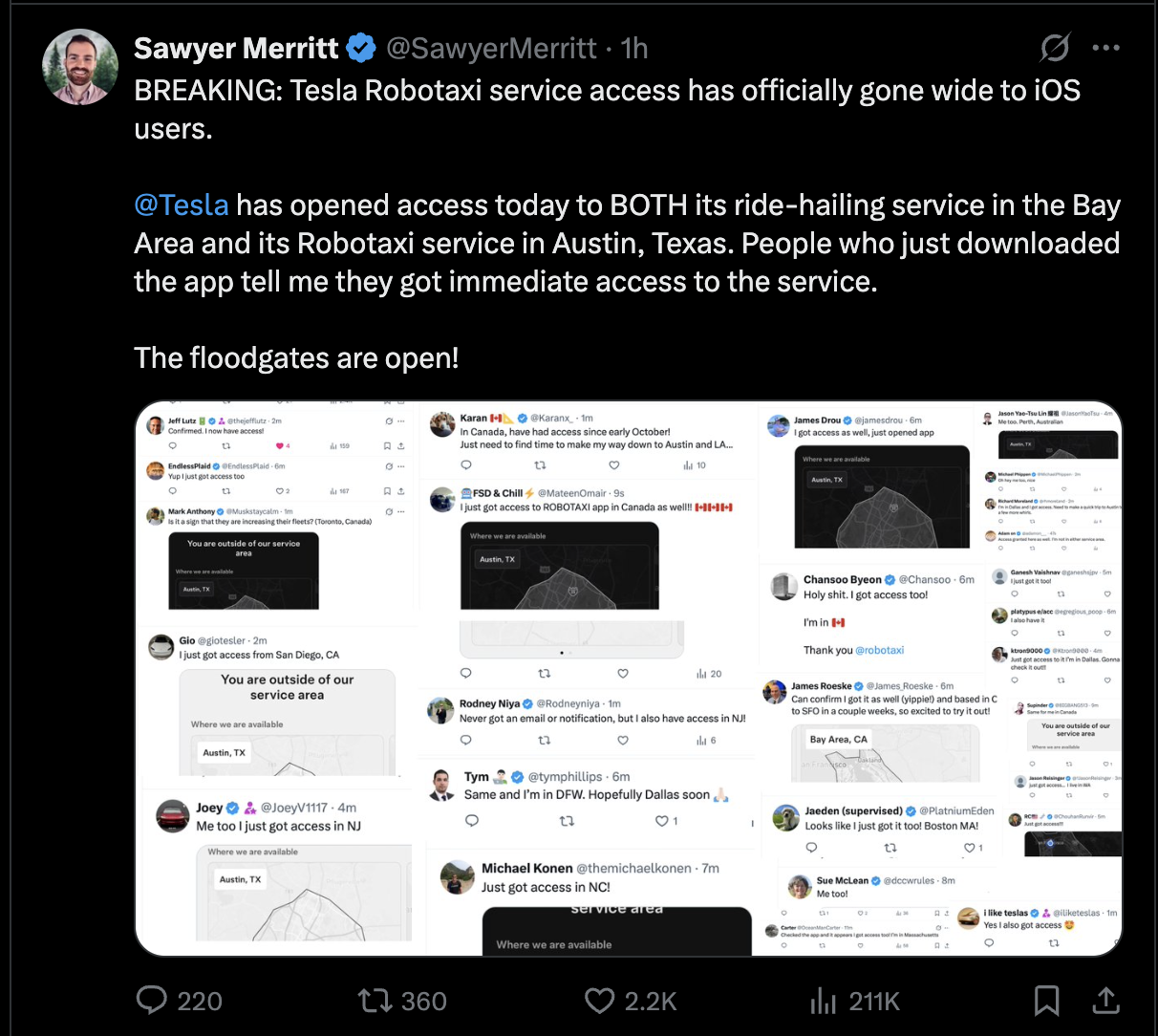

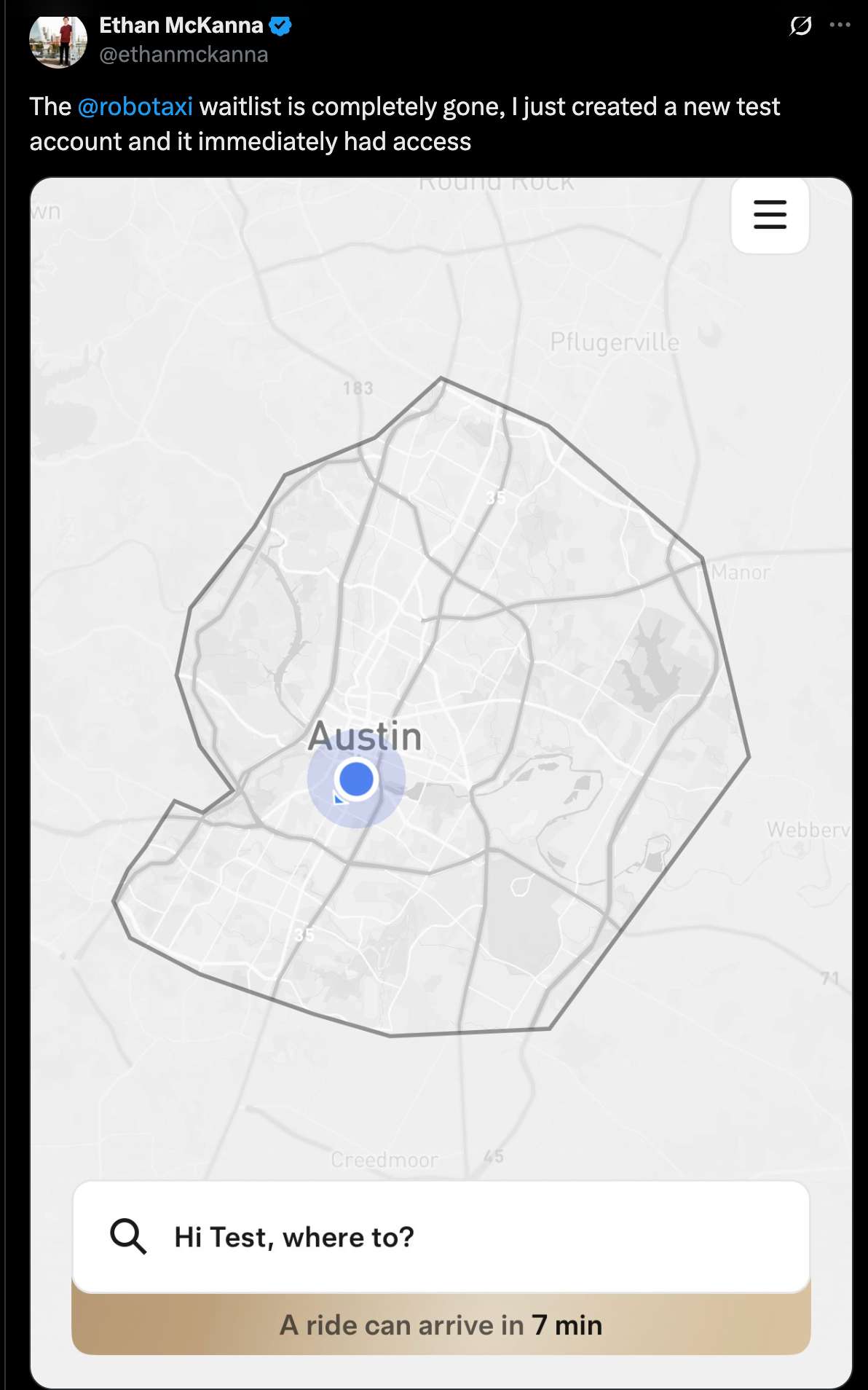

Yes, but we won't quibble about waitlists. As long as even 10 non-handpicked members of the public have used the service by the end of August, that's a YES. Also if there's a waitlist, anyone has to be able to get on it and there has to be intent to scale up. In other words, Tesla robotaxis have to be actually becoming a thing, with summer 2025 as when it started.

If it's invite-only and Tesla is hand-picking people, that's not a public launch. If it's viral-style invites with exponential growth from the start, that's likely to be within the spirit of a public launch.

A potential litmus test is whether serious journalists and Tesla haters end up able to try the service.

UPDATE: We're deeming this to be satisfied.

2. What if there's a human backup driver in the driver's seat?

This importantly does not count. That's supervised FSD.

3. But what if the backup driver never actually intervenes?

Compare to Waymo, which goes millions of miles between [injury-causing] incidents. If there's a backup driver we're going to presume that it's because interventions are still needed, even if rarely.

4. What if it's only available for certain fixed routes?

That would resolve NO. It has to be available on unrestricted public roads [restrictions like no highways are OK], and you have to be able to choose an arbitrary destination. I.e., it has to count as a taxi service.

5. What if it's only available in a certain neighborhood?

This we'll allow. It just has to be a big enough neighborhood that it makes sense to use a taxi. Basically anything that isn't a drastic restriction of the environment.

6. What if they drop the robotaxi part but roll out unsupervised FSD to Tesla owners?

This is unlikely, but if this were level 4+ autonomy where you could send your car by itself to pick up a friend, we'd call that a YES per the spirit of the question.

7. What about level 3 autonomy?

Level 3 means you don't have to actively supervise the driving (like you can read a book in the driver's seat) as long as you're available to immediately take over when the car beeps at you. This would be tantalizingly close and a very big deal but is ultimately a NO. My reason to be picky about this is that a big part of the spirit of the question is whether Tesla will catch up to Waymo, technologically if not in scale at first.

8. What about tele-operation?

The short answer is that that's not level 4 autonomy, so that would resolve NO for this market. This is a common misconception about Waymo's phone-a-human feature. It's not remotely (ha) like a human with a VR headset steering and braking. If that ever happened it would count as a disengagement and have to be reported. See Waymo's blog post with examples and screencaps of the cars needing remote assistance.

To get technical about the boundary between a remote human giving guidance to the car vs remotely operating it, grep "remote assistance" in Waymo's advice letter filed with the California Public Utilities Commission last month. Excerpt:

The Waymo AV [autonomous vehicle] sometimes reaches out to Waymo Remote Assistance for additional information to contextualize its environment. The Waymo Remote Assistance team supports the Waymo AV with information and suggestions [...] Assistance is designed to be provided quickly - in a matter of seconds - to help get the Waymo AV on its way with minimal delay. For a majority of requests that the Waymo AV makes during everyday driving, the Waymo AV is able to proceed driving autonomously on its own. In very limited circumstances such as to facilitate movement of the AV out of a freeway lane onto an adjacent shoulder, if possible, our Event Response agents are able to remotely move the Waymo AV under strict parameters, including at a very low speed over a very short distance.

Tentatively, Tesla needs to meet the bar for autonomy that Waymo has set. But if there are edge cases where Tesla is close enough in spirit, we can debate that in the comments.

9. What about human safety monitors in the passenger seat?

Oh geez, it's like Elon Musk is trolling us to maximize the ambiguity of these market resolutions. Tentatively (we'll keep discussing in the comments) my verdict on this question depends on whether the human safety monitor has to be eyes-on-the-road the whole time with their finger on a kill switch or emergency brake. If so, I believe that's still level 2 autonomy. Or sub-4 in any case.

See also FAQ3 for why this matters even if a kill switch is never actually used. We need there not only to be no actual disengagements but no counterfactual disengagements. Like imagine that these robotaxis would totally mow down a kid who ran into the road. That would mean a safety monitor with an emergency brake is necessary, even if no kids happen to jump in front of any robotaxis before this market closes. Waymo, per the definition of level 4 autonomy, does not have that kind of supervised self-driving.

10. Will we ultimately trust Tesla if it reports it's genuinely level 4?

I want to avoid this since I don't think Tesla has exactly earned our trust on this. I believe the truth will come out if we wait long enough, so that's what I'll be inclined to do. If the truth seems impossible for us to ascertain, we can consider resolve-to-PROB.

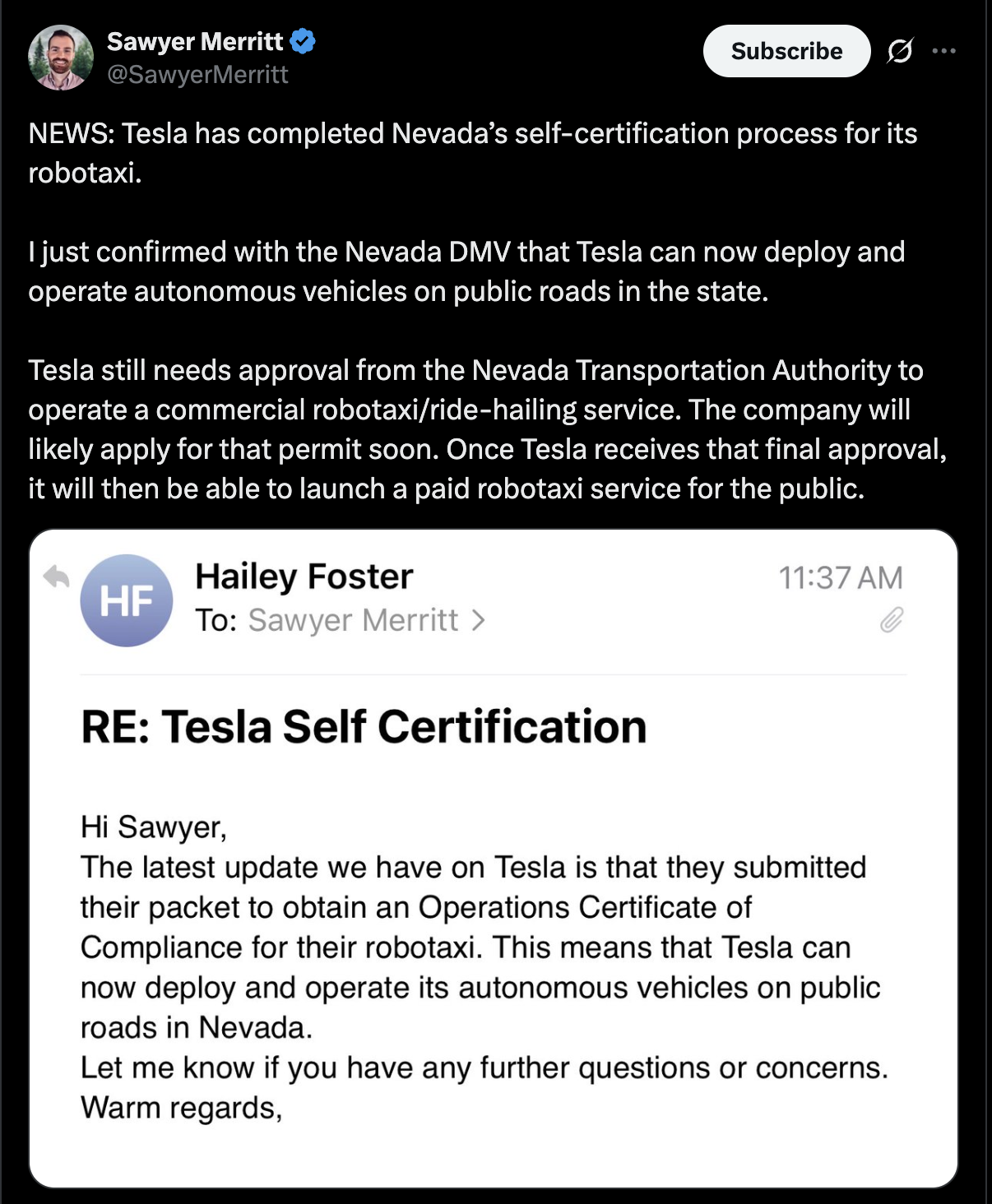

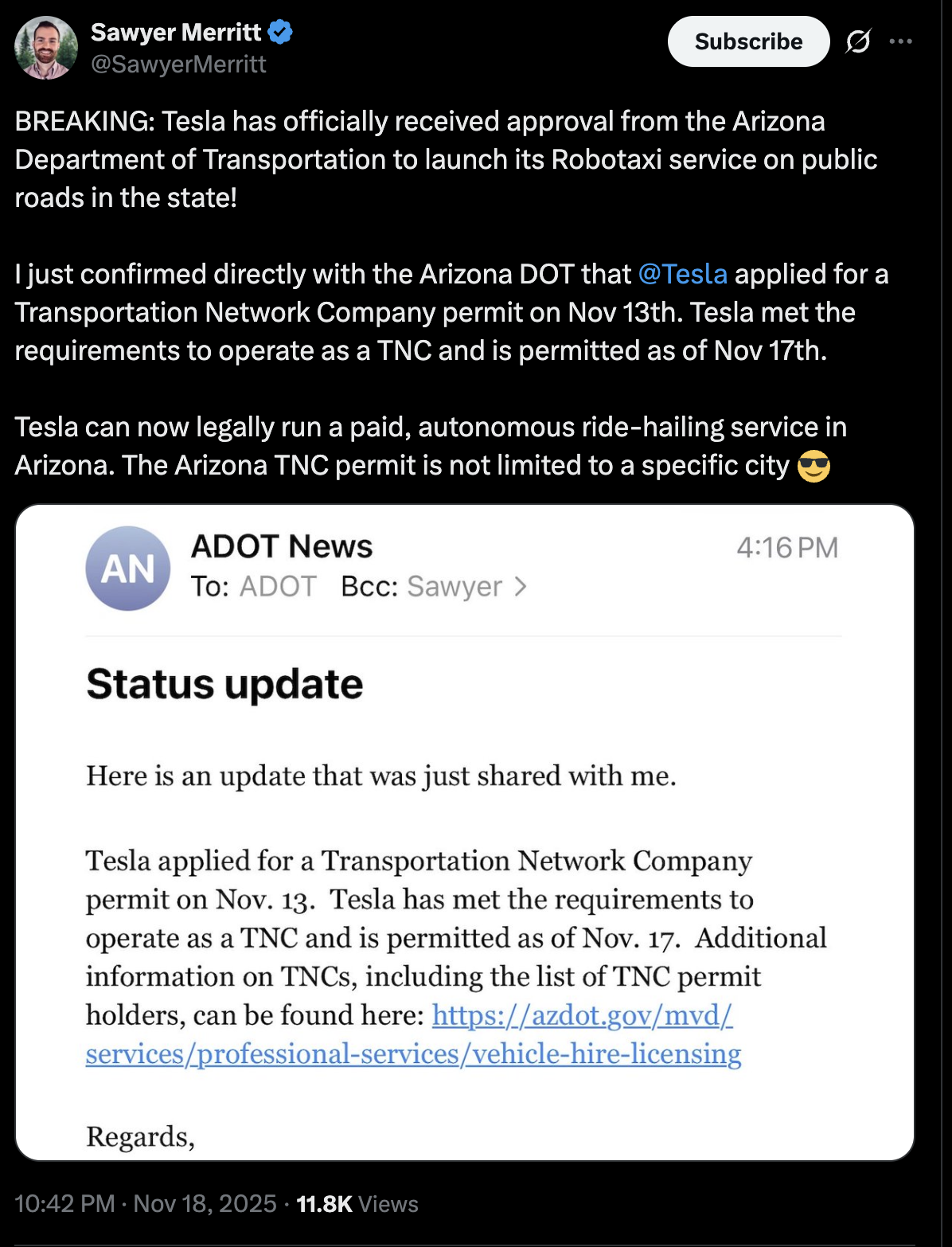

11. Will we trust government certification that it's level 4?

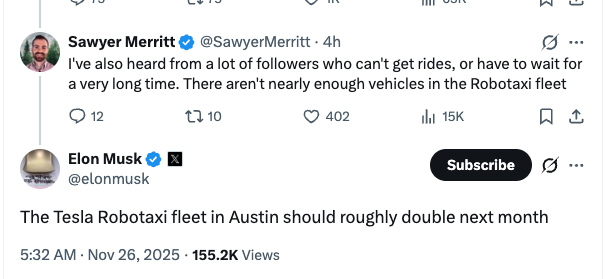

Yes, I think this is the right standard. Elon Musk said on 2025-07-09 that Tesla was waiting on regulatory approval for robotaxis in California and expected to launch in the Bay Area "in a month or two". I'm not sure what such approval implies about autonomy level but I expect it to be evidence in favor. (And if it starts to look like Musk was bullshitting, that would be evidence against.)

12. What if it's still ambiguous on August 31?

Then we'll extend the market close. The deadline for Tesla to meet the criteria for a launch is August 31 regardless. We just may need more time to determine, in retrospect, whether it counted by then. I suspect that with enough hindsight the ambiguity will resolve. Note in particular FAQ1, which says that Tesla robotaxis have to be becoming a thing (what "a thing" is is TBD but something about ubiquity and availability) with summer 2025 as when it started. Basically, we may need to look back on summer 2025 and decide whether that was a controlled demo, done before they actually had level 4 autonomy, or whether they had it and were just scaling up slowly and cautiously at first.

13. If safety monitors are still present, say, a year later, is there any way for this to resolve YES?

No. By then it would be well past the point where we'd presume that Tesla had not achieved level 4 autonomy in summer 2025.

14. What if they ditch the safety monitors after August 31st but tele-operation is still a question mark?

We'll also need transparency about tele-operation and disengagements. If that doesn't happen soon after August 31 (definition of "soon" to be determined) then that too is a presumed NO.

Ask more clarifying questions! I'll be super transparent about my thinking and will make sure the resolution is fair if I have a conflict of interest due to my position in this market.

[Ignore any auto-generated clarifications below this line. I'll add to the FAQ as needed.]

Update 2025-11-01 (PST) (AI summary of creator comment): The creator is [tentatively] proposing a new necessary condition for YES resolution: the graph of driver-out miles (miles without a safety driver in the driver's seat) should go roughly exponential in the year following the initial launch. If the graph is flat or going down (as it may have done in October 2025), that would be a sufficient condition for NO resolution.

Update 2025-11-06 (PST) (AI summary of creator comment): The creator outlined how they would update their probability assessment based on three possible scenarios by January 1st:

World 1: Tesla misses deadline, safety riders still present, no expansion → probability should drop

World 2: Tesla technically hits deadline with some confirmed rides without safety riders, but no scaling up → creator would be suspicious this is a publicity stunt/controlled demo rather than meaningful evidence of level 4 autonomy

World 3: Safety riders gone in Austin plus meaningful expansion → would be a meaningful update on probability Tesla has cracked level 4 autonomy

Note: All three scenarios would still leave question marks about whether Tesla achieved level 4 by the August 31, 2025 deadline.

Update 2025-12-10 (PST) (AI summary of creator comment): The creator has indicated that Elon Musk's November 6th, 2025 statement ("Now that we believe we have full self-driving / autonomy solved, or within a few months of having unsupervised autonomy solved... We're on the cusp of that") appears to be an admission that the cars weren't level 4 in August 2025. The creator is open to counterarguments but views this as evidence against YES resolution.

Update 2025-12-10 (PST) (AI summary of creator comment): The creator clarified that presence of safety monitors alone is not dispositive for determining if the service meets level 4 autonomy. What matters is whether the safety monitor is necessary for safety (e.g., having their finger on a kill switch).

Additionally, if Tesla doesn't remove safety monitors until deploying a markedly bigger AI model, that would be evidence the previous AI model was not level 4 autonomous.


Elon admitting that they haven't quite solved full autonomy while @dreev is still trying to read tea leaves from last summer is very representative of this market.

Following the recent news about FSD being in a certification process in the Netherlands (https://x.com/KRoelandschap/status/1992971468162912495), Tesla is now doing FSD demos to clients in Europe. Here's one in Denmark https://x.com/andersbaek1/status/1999072654112747584

This seems like quite an admission from Musk, a month ago:

Now that we believe we have full self-driving / autonomy solved, or within a few months of having unsupervised autonomy solved... We're on the cusp of that -- I know I've said that a few times but we really are at this point and you can feel it for yourselves with the 14.1 release.

https://www.youtube.com/live/VGPlvmMjPtE?si=3J4tsW1Cr0Hdk3qj&t=1h42m30s

That's from the 2025 Tesla shareholder meeting on November 6th.

@MarkosGiannopoulos Exciting stuff, whatever the timeline. For this market, I'm open to counterarguments but this all sounds like Musk admitting the cars weren't level 4 in August?

@dreev Not sure there is something to "admit"

Musk, 2025 Q1: "Unsupervised autonomy will first be solved for the Model Y in Austin."

https://www.earningscall.ai/stock/transcript/TSLA-2025-Q1

@MarkosGiannopoulos What about back in June when Tesla delivered a car to a customer with no one inside it and Musk averred that that was "FULLY autonomous" (emphasis his)?

Or, more to the point, this market is asking whether the Austin robotaxis this summer cracked unsupervised autonomy, and Musk has been explicit that the answer was yes. I think this "on the cusp" and "within a few months" is a reversal.

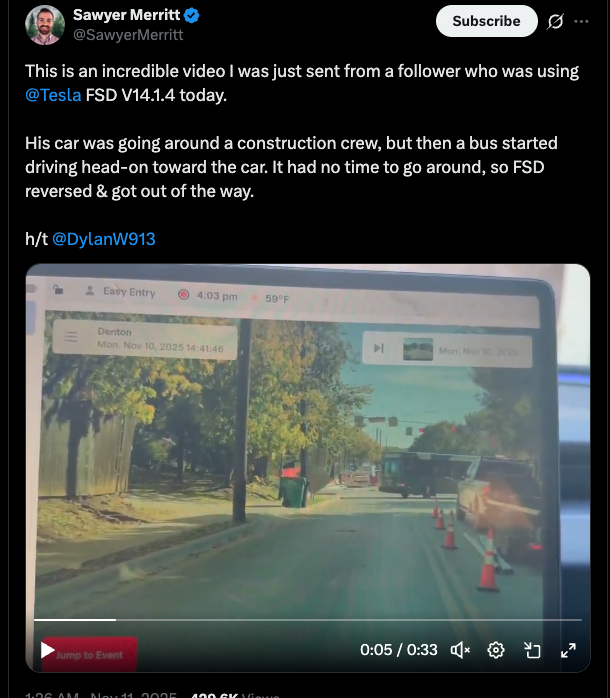

To be less cynical, I am starting to believe that they're getting close. The other day Musk actually tweeted that FSD version 14.2.1 will sometimes let you text and drive. To be particularly charitable to Musk, and as he argued in the shareholder meeting (see 1h9m31s), people are currently turning off FSD in order to text while driving, so it's safer for FSD to be less strict about that.

(I have huge problems with just accepting that people text and drive in non-self-driving cars -- and everyone I know is conscientious about this -- but regardless, it's at least plausible that Tesla is saving lives on net at this point. Related aside: Tesla, in arguing that FSD is safer than human driving, cites graphs showing lower accident rates with FSD turned on vs off. I think that's apples and oranges. People turn on FSD specifically when it feels safe to do so, and disengage when it feels unsafe. I see similar skepticism about Waymo's safety numbers but Waymo actually goes out of their way to get apples-to-apples comparisons. There seems to be little doubt at this point that Waymos really are much safer than human drivers. I'm interested to hear more evidence that Tesla's FSD is safer than humans.)

@dreev "What about back in June when Tesla delivered a car to a customer with no one inside it and Musk averred that that was "FULLY autonomous" (emphasis his)?" - Yes, that was fully autonomous, but a singular event. If you count that as declaring "autonomy solved" then autonomy has been solved already months before that, as cars exiting the California and Texas factories drive themselves (no human inside) to the parking lot. https://x.com/Tesla_AI/status/1911525549920620580 But people generally discount this as "worthless" :)

"The other day Musk actually tweeted that FSD version 14.2.1 will sometimes let you text and drive." - This has happened. 14.2.1 appears to have relaxed rules. People have put it to the test https://x.com/ehuna/status/1998066141126959406 and some have gotten tickets, of course :D

"People turn on FSD specifically when it feels safe to do so, and disengage when it feels unsafe." - People are posting screenshots of doing 100% on FSD these days https://x.com/gohnjanotis/status/1997042620296544373

Re: The autonomous delivery being a singular event: The bearish take is that Tesla cheated. That could mean (a) they yolo'd it, counting on the low likelihood of a crash on a single short trip despite subhuman safety, (b) they ensured a clear path with lead vehicles, (c) they trained on that specific route, and/or (d) they had remote operators ready to take over or with their finger on a kill switch.

Re: Teslas self-navigating from factory to parking lot: This I believe, and have no particular accusations of misrepresentation. It's easier than arbitrary routes and normal speed in normal traffic but it's nothing to sneeze at! There are pedestrians to not kill, etc.

Re: FSD letting people drive and text: Agreed.

Re: 100% FSD drives: That screenshot is 500 miles. Definitely Bayesian evidence but we need to see more 9s. I talked about this a couple weeks ago on AGI Friday -- https://agifriday.substack.com/p/felicide -- and looking at the crowdsourced data again today, it looks like FSD is up to 1000-2000 or so miles between critical disengagements. Steady progress!
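To make the "more 9s" point concrete, here is a minimal sketch that assumes critical disengagements arrive as a Poisson process with some mean spacing M in miles (an assumption for illustration, not something the crowdsourced tracker claims). Under that model, the chance of a 500-mile drive with zero critical disengagements is exp(-500 / M), and the ratio of that probability under two candidate values of M is the Bayesian evidence the screenshot provides. The function names are hypothetical.

```python
import math

def p_clean_drive(drive_miles: float, miles_per_critical_de: float) -> float:
    """Chance of zero critical disengagements over a drive, ASSUMING
    disengagements follow a Poisson process with the given mean spacing."""
    return math.exp(-drive_miles / miles_per_critical_de)

def likelihood_ratio(drive_miles: float, optimistic: float, pessimistic: float) -> float:
    """How much a clean drive favors the optimistic mean spacing over the
    pessimistic one (the Bayes factor under the same Poisson assumption)."""
    return p_clean_drive(drive_miles, optimistic) / p_clean_drive(drive_miles, pessimistic)

# 500 miles matches the screenshot; the candidate spacings are illustrative only.
for spacing in (1_000, 1_500, 2_000, 50_000):
    print(f"{spacing:>6} mi per critical DE: P(clean 500 mi) = {p_clean_drive(500, spacing):.3f}")
print(f"Bayes factor, 50,000 vs 1,500 mi: {likelihood_ratio(500, 50_000, 1_500):.2f}")
```

Even at 1,000 miles per critical disengagement, a clean 500-mile drive happens about 61% of the time under this assumption, and the Bayes factor between 50,000 and 1,500 miles per critical disengagement comes out around 1.4. So a single 100% FSD drive of that length is real but weak evidence.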

(But it sure makes me nervous about drivers getting lulled into complacency about watching the road.)

>"it looks like FSD is up to 1000-2000 or so miles"

Or is that down from 9000 miles when first critical DE was reported? 😭 🤣

Seems like they must have had at least 4 in fairly quick succession?

Of course, it could just be random luck: very lucky early on with v14 and now less lucky. Could there be alternative explanations? E.g., have they realised some setting was under-reporting events that should be considered critical disengagements?


Single car delivery - seems like at best a car delivery service in its infancy rather than a "robotaxi service" which surely requires rides with paying customers in the vehicle?


A new model that is an order of magnitude larger is due in Jan/Feb. Before that, there's a new model on 9th Dec, and about 3 weeks after that to remove safety monitors would land just before year end. Hmm. Suspicious, do you think? Will they really remove monitors while using v14, or will they, out of an abundance of caution, wait for this next much larger model?

If they only use a much larger model with no safety monitor, that doesn't seem like they were ready to do so by August 2025?

2000 miles or so doesn't seem enough to me to claim it's safer than a human driver; I would prefer to see a number that is at least 50,000 miles. There is, however, a good possibility that not all of the critical DEs would have resulted in crashes without human supervision. It is hard to judge the seriousness of a critical DE event without more information.
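For a sense of how much clean driving it would take to back up a 50,000-mile figure, here is a minimal sketch using the standard Poisson zero-event bound (again assuming critical disengagements arrive as a Poisson process, and counting every critical DE as an event even though, as noted above, not all of them would have been crashes). The function name is hypothetical.

```python
import math

def clean_miles_needed(target_miles_per_event: float, confidence: float = 0.95) -> float:
    """Event-free miles needed before a Poisson model lets you say, at the given
    confidence, that the mean spacing between events exceeds the target.

    With zero events in n miles, a true mean spacing of exactly `target` would
    produce that outcome with probability exp(-n / target). Requiring that
    probability to be at most (1 - confidence) gives
    n >= -target * ln(1 - confidence).
    """
    return -target_miles_per_event * math.log(1 - confidence)

for target in (2_000, 50_000):
    print(f"target {target:>6,} mi: need ~{clean_miles_needed(target):,.0f} clean miles")
```

At 95% confidence the multiplier is -ln(0.05), roughly 3.0 (the statistician's "rule of three"), so supporting a 50,000-mile claim this way would take on the order of 150,000 disengagement-free miles.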

It seems the most objective thing remains that safety monitors were present so they weren't level 4 in summer 2025.

@ChristopherRandles I'm with you on everything here except your last sentence. Presence of safety monitors alone isn't dispositive. It depends on whether the safety monitor is necessary for safety, like by having their finger on a kill switch.

(If I'm contradicting anything in the FAQ, please yell at me. My comments here aren't official unless I update the FAQ!)

In any case, that's a great point about how, if Tesla doesn't remove the safety monitors until they deploy a markedly bigger AI model in the cars then that's evidence that the previous AI model was not level 4 autonomous.

But my original point at the top of this thread is that maybe Musk just flat-out admitted that the cars were not level 4 as of November 6th?

@dreev That last sentence you are disputing was probably badly worded. I do accept that it is possible to put a safety monitor in a level 4 car. If that were done, you wouldn't expect many interventions. We have seen videos of interventions and erratic driving. Maybe they had improved by the end of August, but we don't have much, if any, data to confirm or disprove this. I think it is possible to argue that even if the cars were level 4 capable by the end of August, Tesla hasn't launched the level 4 robotaxi service until they remove the safety monitors, since that is a key feature of level 4. Whether the service was launched (past tense) is a different matter from whether the software in use at the end of August was level 4 capable.

We believe we have been told the robotaxis were using v14 in late June while Tesla owners were still on v13. I am tending to assume robotaxis used v14.0.x, and it is possible they moved on to v14.1 by the end of August, but we don't know this one way or the other. They might even use a completely different numbering sequence for robotaxis than for Tesla owners. On the tracker, v14.1 was in use from 7th to 12th October. v14.2 does not appear on the tracker until 21st November.

If the robotaxis had moved on to 14.2 by the end of August, wouldn't it seem strange to have rolled out v14.1 through 14.1.7 to Tesla owners in October and November rather than going more directly to v14.2? So I don't believe the robotaxis are likely to have moved on to v14.2 or equivalent by the end of August.

Of course, you can have minor updates to level 4 software, so the mere existence of later versions doesn't prove that v14.1 and 14.0.x were not level 4 capable. I would, however, suggest there is more than just the existence of the later versions:

There are reports of regulatory investigations into erratic driving.

If the software at the end of August was fully level 4 capable, would Tesla take 4 months to remove safety monitors? Some caution and a month or two before removing them, sure. Maybe regulatory investigations caused this to stretch to 4 months? Feels more like there were a few more issues, and they are nearly there now but weren't at the end of August?

Re Musk admitting it: well, you did say you didn't want to trust Tesla / Musk, but maybe that was meant to be one way only? I.e., if Tesla claimed level 4 capability you didn't want to trust this, but if Musk says it is very nearly there now, then we can conclude it wasn't there at the end of August. It would seem quite reasonable to me to treat it as one way only like that, but then it does suit me to reach that conclusion.

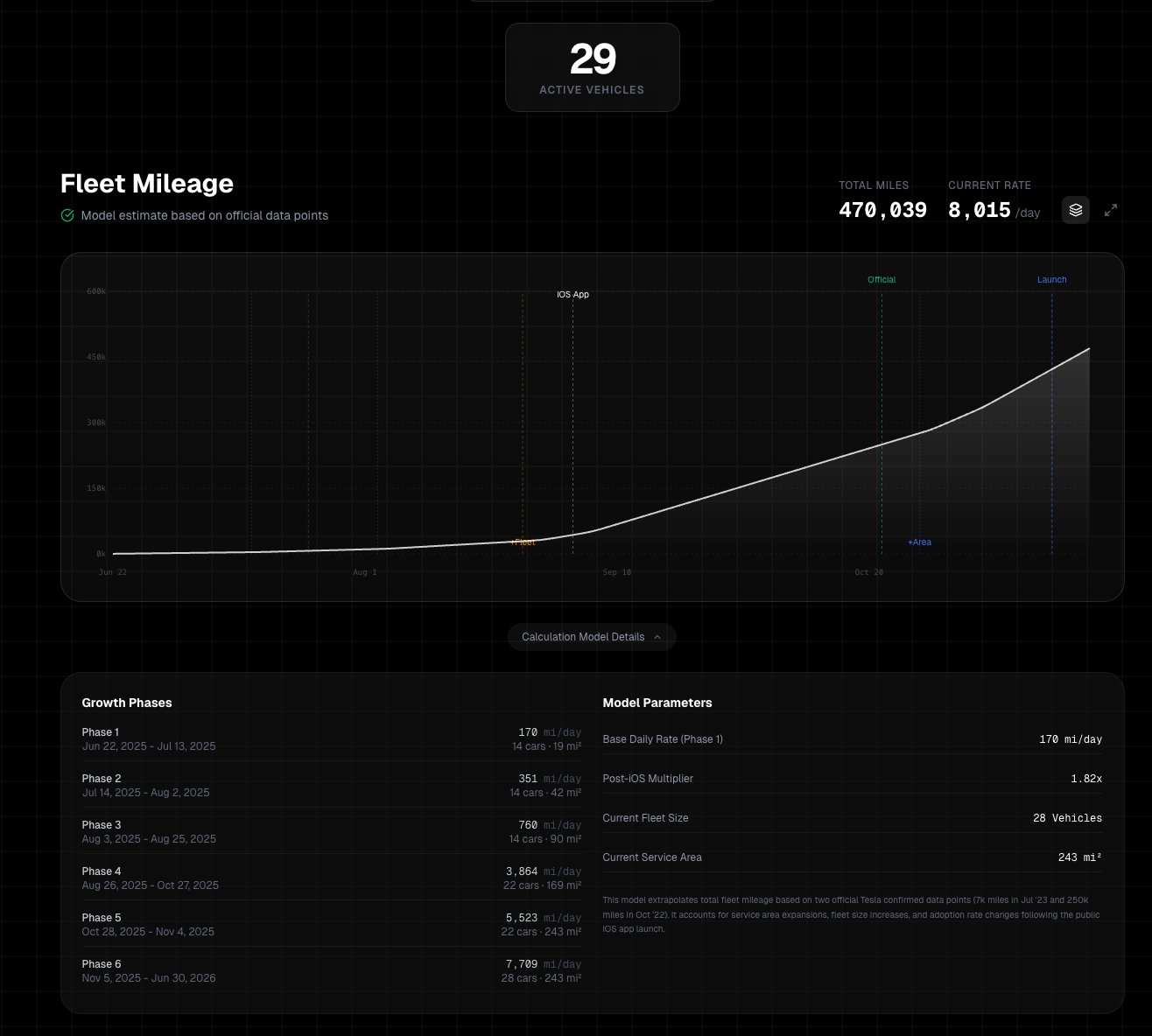

An unofficial tracker (of people counting license plates!) puts the number of Robotaxis in Austin at 29 https://x.com/ethanmckanna/status/1992408024804434281

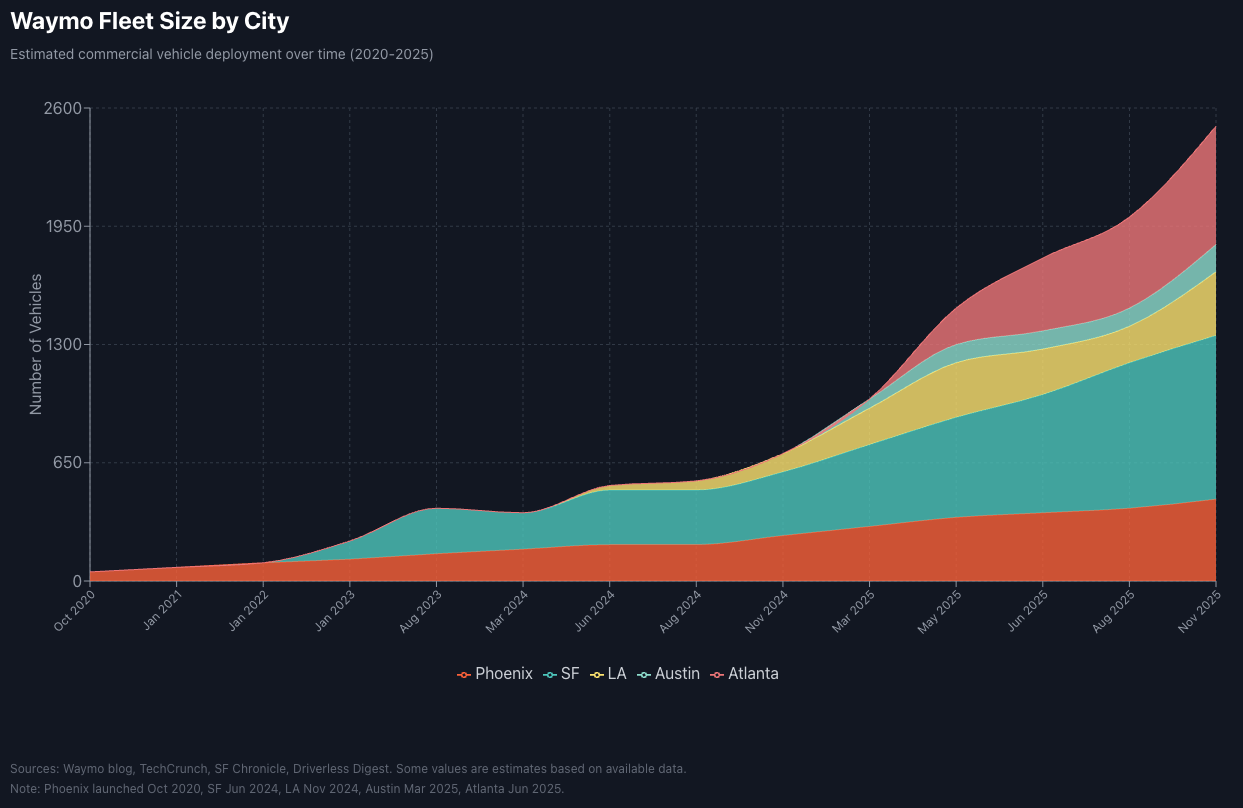

Waymo count: 92 https://waymo.watch/

@MarkosGiannopoulos Looks like waymo.watch shows zero for multiple cities they've launched in. We do know there are over 100 Waymos in Austin, but maybe not a lot more? Here's what Claude 4.5 Opus thinks:

FSD handling Canadian snow https://x.com/SawyerMerritt/status/1988056514511380540

Seems like Tesla is ahead of Waymo on some parts of this autonomy thing.